A Strategic Guide for AI Solutions Managers—from Ingestion to Monitoring

You’ve got the model. You’ve got the mandate. But what holds the entire AI system together? The pipeline.

Whether you’re forecasting fleet maintenance, detecting fraud in real time, or streamlining logistics, your machine learning system is only as good as the pipeline feeding it. And as an AI Solutions Manager, you don’t just need to understand the pipeline—you need to architect it with precision, scalability, and business outcomes in mind.

Let’s walk through the seven stages of a modern ML data pipeline, how they connect, and what you actually need to consider at each step.

🔗 From Raw Data to Real Value: The Pipeline Flow

The pipeline isn’t just a set of stages—it’s a system of decisions. Here’s how each part contributes:

| Stage | Purpose | Key Tools | Strategic Risk if Misaligned |
|---|---|---|---|
| Ingestion | Capture data at speed from many sources | Kafka, SQL, APIs | Latency, missing events, misaligned timestamps |
| Preprocessing | Clean, normalize, fill gaps | Spark, Pandas, dbt | Garbage-in-garbage-out, skewed model inputs |
| Feature Engineering | Turn raw data into meaningful signals | Embeddings, windowing, domain logic | Irrelevant signals, poor model generalization |
| Storage | Efficiently store data for reuse | Parquet, Delta Lake, NoSQL | Inaccessible or redundant data, cost blowouts |
| Modeling | Train predictive models | scikit-learn, PyTorch, XGBoost | Overfitting, misalignment with business KPIs |
| Serving | Deliver results into production workflows | FastAPI, ONNX, MLflow, Docker | Latency, scaling issues, integration friction |
| Monitoring | Detect drift, uptime issues, performance decay | Prometheus, Grafana, dashboards | Silent model failure, degraded user trust |

Now let’s explore each stage in more detail.

1️⃣ Ingestion: The Start of It All

Your pipeline starts with raw data in motion. This is where APIs, Kafka streams, SQL queries, and IoT sensors pump life into your system.

🔧 Use Case: A fraud detection model pulling transaction data in near real time.

⚠️ Common Pitfall: Misaligned timestamps between systems—causing models to “learn” incorrect sequences.
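
To make the timestamp pitfall concrete, here is a minimal sketch that converts every event to UTC before ordering. The source names, the sample events, and the `assumed_offset_hours` setting are all hypothetical; in a real system the offset for a naive timestamp would have to come from whoever owns that source.

```python
from datetime import datetime, timedelta, timezone

def to_utc(ts: str, assumed_offset_hours: float = 0.0) -> datetime:
    """Parse an ISO 8601 timestamp and return a timezone-aware UTC datetime.

    Timestamps without an offset are interpreted using assumed_offset_hours,
    a hypothetical per-source setting.
    """
    dt = datetime.fromisoformat(ts)
    if dt.tzinfo is None:
        dt = dt.replace(tzinfo=timezone(timedelta(hours=assumed_offset_hours)))
    return dt.astimezone(timezone.utc)

# Three hypothetical sources reporting in three different conventions.
events = [
    {"source": "pos", "ts": "2024-03-01T09:00:05-05:00"},
    {"source": "web", "ts": "2024-03-01T14:00:01+00:00"},
    {"source": "legacy", "ts": "2024-03-01 15:00:03"},  # naive, assumed UTC+1
]
offsets = {"legacy": 1.0}  # assumption: agreed with the source owner

for e in events:
    e["ts_utc"] = to_utc(e["ts"], offsets.get(e["source"], 0.0))

# Sort on the normalized value, never on the raw strings.
events.sort(key=lambda e: e["ts_utc"])
ordered = [e["source"] for e in events]
```

Sorted by raw string, these events would come out in a different order than they actually happened, which is exactly the kind of sequence error a model can quietly learn.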

💡 Pro Tip: Build in retry logic and buffering. Design like your upstream is unreliable (because it probably is).
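
As a rough illustration of retry logic plus buffering, the sketch below wraps a fetch call in exponential backoff and parks events in a bounded in-memory buffer. `fetch_with_retry`, `BoundedBuffer`, and `flaky_source` are illustrative names, not part of any library, and a production system would more likely lean on the durability of a broker such as Kafka than on process memory.

```python
import random
import time
from collections import deque

def fetch_with_retry(fetch, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call fetch(); on ConnectionError, retry with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # give up and let the caller decide what to do
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 2))

class BoundedBuffer:
    """Hold events while downstream is slow; evict the oldest when full."""

    def __init__(self, maxlen=10_000):
        self._items = deque(maxlen=maxlen)
        self.dropped = 0  # count evictions so data loss is visible

    def put(self, event):
        if len(self._items) == self._items.maxlen:
            self.dropped += 1  # the deque evicts the oldest item on append
        self._items.append(event)

    def drain(self):
        items = list(self._items)
        self._items.clear()
        return items

# Hypothetical upstream that fails twice before succeeding.
calls = {"n": 0}

def flaky_source():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("upstream unavailable")
    return [{"txn_id": 1}, {"txn_id": 2}]

buffer = BoundedBuffer(maxlen=1000)
for event in fetch_with_retry(flaky_source, sleep=lambda s: None):  # no real sleep in the demo
    buffer.put(event)
```

Counting dropped events is a deliberate choice here: if the buffer ever overflows, you want that to show up on a dashboard rather than disappear silently.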

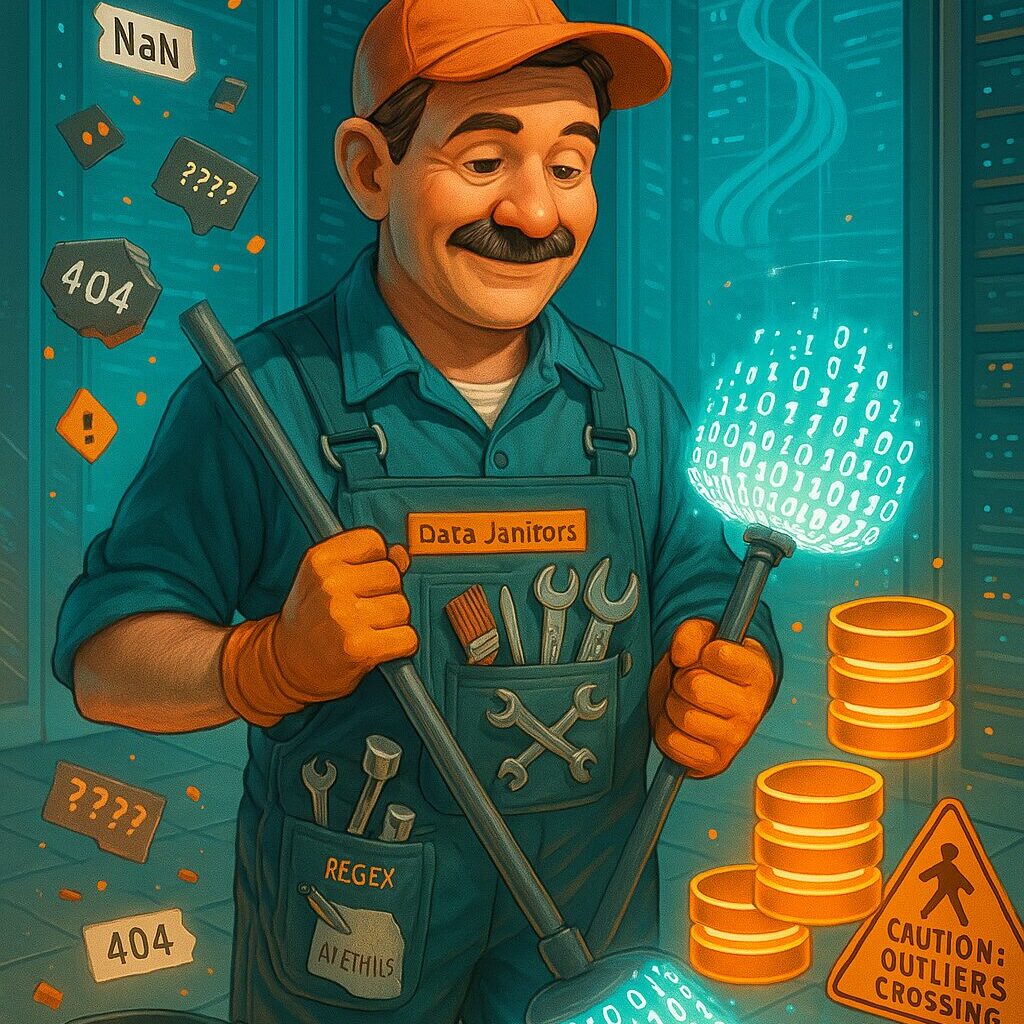

2️⃣ Preprocessing: The Data Cleanse

Before anything meaningful can happen, you need to normalize formats, impute missing values, and de-duplicate records.

🔧 Use Case: Manufacturing systems predicting part failure. Sensors may drop data; preprocessing fills the gaps.

⚠️ Common Pitfall: Over-engineered pipelines that become hard to debug.

💡 Pro Tip: Use dbt or Spark pipelines that are modular and versioned. Small, versioned steps are easier to test and easier to trust.
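
Here is what one small, testable preprocessing step might look like, written in plain Python so it runs anywhere. The field names (`sensor_id`, `ts`, `value`) and the `max_fill` limit are assumptions for illustration; the same logic would normally live in a Spark job or a dbt model.

```python
def preprocess(readings, max_fill=2):
    """De-duplicate by (sensor_id, ts), sort, and forward-fill short gaps.

    A missing value is filled with the sensor's last observed value, but only
    for up to max_fill consecutive rows. Longer gaps are dropped.
    """
    unique = {}
    for r in readings:
        unique.setdefault((r["sensor_id"], r["ts"]), r)  # keep the first copy

    cleaned = []
    last_value = {}  # sensor_id -> last observed (not imputed) value
    fills = {}       # sensor_id -> consecutive fills so far
    for key in sorted(unique):
        row = dict(unique[key])
        sid = row["sensor_id"]
        if row["value"] is None:
            if sid in last_value and fills.get(sid, 0) < max_fill:
                row["value"] = last_value[sid]
                row["imputed"] = True  # flag imputed rows for downstream use
                fills[sid] = fills.get(sid, 0) + 1
            else:
                continue  # gap too long: drop rather than invent data
        else:
            row["imputed"] = False
            last_value[sid] = row["value"]
            fills[sid] = 0
        cleaned.append(row)
    return cleaned

readings = [
    {"sensor_id": "s1", "ts": 1, "value": 20.0},
    {"sensor_id": "s1", "ts": 1, "value": 20.0},  # duplicate
    {"sensor_id": "s1", "ts": 2, "value": None},
    {"sensor_id": "s1", "ts": 3, "value": None},
    {"sensor_id": "s1", "ts": 4, "value": None},  # third gap in a row: dropped
    {"sensor_id": "s1", "ts": 5, "value": 21.5},
]
cleaned = preprocess(readings)
```

Keeping the `imputed` flag means the modeling team can later check whether filled values are helping or hurting.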

3️⃣ Feature Engineering: Where the Magic Happens

This is where your domain knowledge and modeling intuition collide. You take raw columns and create signals—things your model can actually learn from.

🔧 Use Case: Transform clickstream logs into time-windowed session features for a recommendation engine.

⚠️ Common Pitfall: Relying too heavily on automated tools without domain input.

💡 Pro Tip: Work with SMEs (subject matter experts). Features built without business context are just noise.
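
For the clickstream use case, a minimal sketch of time-windowed session features could look like this. The 30-minute inactivity gap and the three features are assumptions; the right gap and the right features are precisely the questions to take to your SMEs.

```python
from collections import defaultdict

SESSION_GAP_SECONDS = 30 * 60  # assumed inactivity threshold

def _summarize(user_id, session):
    """Turn one session, a list of (ts, item_id) pairs, into a feature row."""
    return {
        "user_id": user_id,
        "n_clicks": len(session),
        "n_unique_items": len({item for _, item in session}),
        "duration_s": session[-1][0] - session[0][0],
    }

def session_features(clicks, gap=SESSION_GAP_SECONDS):
    """Group (user_id, ts_seconds, item_id) clicks into sessions per user."""
    by_user = defaultdict(list)
    for user_id, ts, item_id in clicks:
        by_user[user_id].append((ts, item_id))

    features = []
    for user_id, events in by_user.items():
        events.sort()
        session = [events[0]]
        for event in events[1:]:
            if event[0] - session[-1][0] > gap:
                # Inactivity exceeded the gap: close this session, start a new one.
                features.append(_summarize(user_id, session))
                session = []
            session.append(event)
        features.append(_summarize(user_id, session))
    return features

clicks = [
    ("u1", 0, "a"), ("u1", 60, "b"), ("u1", 120, "a"),
    ("u1", 4000, "c"),  # more than 30 minutes later: new session
    ("u2", 10, "a"),
]
features = session_features(clicks)
```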

4️⃣ Storage: Not Just a Data Lake—An Organized Warehouse

Good storage decisions reduce cost, improve access, and future-proof your pipeline. Bad ones slow everything down.

🔧 Use Case: Delta Lake for storing labeled imagery used in a quality control vision model.

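⚠️ Common Pitfall: Storing wide, scan-heavy datasets in row-based formats such as CSV or JSON = 💸 + latency, because every query reads whole rows even when it needs only a few columns.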

💡 Pro Tip: Use columnar formats (Parquet), partition on the columns you filter by most, and apply retention policies early.
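
One way to make a retention policy enforceable is to lay data out in date partitions so that "older than N days" maps to whole directories. The sketch below only computes paths and expiry lists; the bucket name, dataset name, and 30-day window are hypothetical, and the actual deletion would go through your storage layer's own tooling.

```python
from datetime import date, timedelta

def partition_path(root, dataset, day):
    """Build a Hive-style partition path such as root/dataset/dt=2024-03-01/."""
    return f"{root}/{dataset}/dt={day.isoformat()}/"

def expired_partitions(partition_days, today, retention_days):
    """Return the partition dates that fall outside the retention window."""
    cutoff = today - timedelta(days=retention_days)
    return sorted(d for d in partition_days if d < cutoff)

today = date(2024, 3, 31)
existing = [date(2024, 1, 1), date(2024, 3, 1), date(2024, 3, 30)]

path = partition_path("s3://example-bucket", "qc_images", date(2024, 3, 1))
to_delete = expired_partitions(existing, today, retention_days=30)
```

Because the policy is a pure function of dates, it can be reviewed and tested long before anyone runs a delete.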

5️⃣ Modeling: Where Models Get Trained (and Judged)

This is the sexy part—training your XGBoosts, CNNs, and Transformers. But remember: great modeling can’t save bad data.

🔧 Use Case: Predicting equipment downtime using historical maintenance logs.

⚠️ Common Pitfall: Overfitting to noisy features that looked good during training but don’t generalize.

💡 Pro Tip: Prioritize feature stability over leaderboard performance. Business trust > Kaggle scores.
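
"Feature stability" can be checked in many ways. One simple sketch, assuming you can slice your history into time periods, is to ask whether a feature's correlation with the target keeps the same sign and a minimum strength in every period. The `min_abs_corr` threshold and the toy data are illustrative only.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0

def is_stable(feature_by_period, target_by_period, min_abs_corr=0.2):
    """Return (stable, correlations), one correlation per time period.

    A feature counts as stable if its correlation with the target keeps the
    same sign and reaches min_abs_corr in every period.
    """
    corrs = [pearson(f, t) for f, t in zip(feature_by_period, target_by_period)]
    same_sign = all(c > 0 for c in corrs) or all(c < 0 for c in corrs)
    strong = all(abs(c) >= min_abs_corr for c in corrs)
    return same_sign and strong, corrs

# Toy data: downtime labels for two periods, plus two candidate features.
downtime = [[0, 0, 1, 1], [0, 0, 1, 1]]
vibration = [[1, 2, 3, 4], [2, 1, 4, 3]]   # positive relationship in both periods
noisy_flag = [[1, 2, 3, 4], [4, 3, 2, 1]]  # relationship flips sign

vibration_stable, _ = is_stable(vibration, downtime)
noisy_stable, _ = is_stable(noisy_flag, downtime)
```

A feature like `noisy_flag` can look excellent on a single training window and still fail this check, which is the overfitting pattern described above.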

6️⃣ Serving: Bringing AI into the Real World

You’ve got predictions—now what? Serving makes them usable. FastAPI, ONNX, and Docker help you deploy fast and flexibly.

🔧 Use Case: Retail product recommender updating every time a customer adds an item to cart.

⚠️ Common Pitfall: Ignoring batch needs—forcing everything into real-time increases cost without business gain.

💡 Pro Tip: Design for both real-time and batch serving. Match delivery to the value window.
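
A common way to support both modes without maintaining two models is to keep one scoring function and expose it through two thin entry points. The sketch below uses a hypothetical linear `score` function as a stand-in for a trained model; in practice the real-time path might sit behind a FastAPI route and the batch path inside a scheduled job.

```python
def score(features, weights, bias=0.0):
    """Hypothetical linear scorer standing in for a trained model."""
    return bias + sum(weights[name] * value for name, value in features.items())

def predict_one(features, weights):
    """Real-time path: score a single request as it arrives."""
    return score(features, weights)

def predict_batch(rows, weights, chunk_size=1000):
    """Batch path: score many rows in chunks, for example from a nightly job."""
    results = []
    for start in range(0, len(rows), chunk_size):
        chunk = rows[start:start + chunk_size]
        results.extend(score(row, weights) for row in chunk)
    return results

weights = {"cart_size": 0.5, "recency_days": -0.1}  # illustrative values
rows = [
    {"cart_size": 2, "recency_days": 10},
    {"cart_size": 4, "recency_days": 0},
]

batch_scores = predict_batch(rows, weights, chunk_size=1)
realtime_scores = [predict_one(row, weights) for row in rows]
```

Because both paths call the same `score` function, a prediction cannot differ just because it was served in batch rather than in real time.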

7️⃣ Monitoring: Your AI Smoke Alarm

Production isn’t the finish line—it’s the test. Drift happens. Latency spikes. Inputs change.

🔧 Use Case: A logistics optimizer that starts misrouting due to fuel price changes not seen in training.

⚠️ Common Pitfall: Only monitoring system uptime—not model quality.

💡 Pro Tip: Track input distribution shifts, prediction confidence, and accuracy against ground-truth labels as that feedback arrives.
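
Input distribution shift can be quantified in several ways. One widely used option is the Population Stability Index (PSI), sketched here in plain Python with equal-width bins taken from the baseline. The bin count, the `eps` floor, and the synthetic fuel price data are assumptions; the often-quoted alert levels of roughly 0.1 and 0.25 are rules of thumb, not guarantees.

```python
from math import log

def psi(expected, actual, n_bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a live sample.

    Bins are equal-width over the baseline's range; live values outside that
    range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # avoid zero width for constant baselines

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # Floor at eps so empty bins do not cause log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * log(ai / ei) for ei, ai in zip(e, a))

# Synthetic example: fuel prices seen in training vs. a shifted live feed.
training_prices = [i / 100 for i in range(1000)]
live_prices = [p + 3 for p in training_prices]

no_drift = psi(training_prices, training_prices)
drift = psi(training_prices, live_prices)
```

A check like this runs on inputs alone, so it can raise a flag well before ground-truth labels arrive to confirm that accuracy has dropped.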

🧠 Final Thought: Your Pipeline = Your Product

Building great ML systems isn’t about one perfect model—it’s about the system around the model. As an AI Solutions Manager, your job is to think holistically:

- Are the right data contracts in place?

- Is the pipeline modular enough for updates?

- Can your ops team troubleshoot failures at any stage?
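
To make the first question concrete, a data contract can start as something very small: an agreed list of fields and types that is checked at the pipeline boundary. The contract below is hypothetical, and real deployments often use a schema registry or a validation library instead of hand-rolled checks.

```python
CONTRACT = {  # hypothetical contract for a transactions feed
    "txn_id": str,
    "amount": float,
    "ts_utc": str,
}

def contract_violations(record, contract=CONTRACT):
    """Return a list of human-readable violations for one record."""
    problems = []
    for field, expected_type in contract.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(record[field]).__name__}"
            )
    return problems

good = {"txn_id": "t1", "amount": 9.99, "ts_utc": "2024-03-01T14:00:01+00:00"}
bad = {"txn_id": 7, "amount": 9.99}

good_problems = contract_violations(good)
bad_problems = contract_violations(bad)
```

Returning readable messages rather than a bare pass or fail also helps with the third question, since an ops engineer can see which field broke and at which stage.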

✅ Action Step: Audit your current ML pipeline using the 7 stages above. Where are you most fragile? Where do you need better observability or process guardrails?

Because in the end, a brittle pipeline breaks trust. But a well-designed one? That scales value.